Published on: May 4, 2023 | Updated on: May 22, 2023

Nudifying AI Tools and Other Problems with Deepfake Artificial Intelligence Technology

Author: Alex Tyndall

Within the past few years, we have seen an explosion in the uses and capabilities of artificial intelligence (AI).

Never has it been easier for companies to automate their services, and smaller startups can now operate at a similar capacity to their larger competitors without the additional manpower.

People living with disabilities can find new freedoms with apps like Seeing AI, or with interfaces that connect to their home’s lights and utilities, granting more autonomy to those who might otherwise have to rely on carers or additional support.

However, the development of AI technology has also led to an increase in copyright infringements as data trawlers scrape creative works from online creators; plagiarism and cheating allegations as students turn to essay-writing tools to get ahead in class; and the rise of the highly controversial ‘deepfake’ technology, which can digitally clone people without their consent.

A very concerning issue is the rise in deepfake pornography and AI nudifying tools, which are often used on a person’s image without their consent. We’ll be investigating the impact this can have on victims, as well as the role deepfakes have begun to play in what is commonly known as revenge porn.

Reader discretion is advised.

What is deepfake technology?

Deepfake technology has been around for decades in limited forms, with the 1997 program Video Rewrite often credited as the first example of deepfake as we know it today. It is sometimes used in filmmaking simply to change actors’ mouth movements and lines, altering the original footage. For example, in 2022 it was used to replace multiple profanities in the action-thriller movie Fall, bringing its rating down to PG-13.

Other instances of deepfake technology being used in mainstream media include:

- Cersei’s ‘walk of shame’, Game of Thrones. When the season 5 episode first aired in 2015, fans were shocked to learn that the nude walk through the streets of King’s Landing was not performed entirely by Lena Headey. Rather, her face was digitally superimposed onto the body of another actress, Rebecca Van Cleave.

- Peter Cushing, Rogue One. In 2016, Lucasfilm released Rogue One, a prequel to the very first Star Wars movie, A New Hope. However, doing so required the producers to use CGI to bring Peter Cushing, who died in 1994, back to the silver screen. The effects drew some criticism for their ‘uncanny valley’ appearance. In 2020, however, YouTube channel Shamook uploaded a side-by-side comparison of Lucasfilm’s CGI and the YouTuber’s own deepfake version. The difference is staggering: whilst not perfect, the deepfaked approach removed some of the more cartoonish elements that the CGI fell victim to. Shamook has since been hired by Lucasfilm as a “Senior Facial Capture Artist”.

The overall intention is to alter a person’s face or body by superimposing different features onto it, as though it were a canvas. Very basic versions of this technology can be seen in FaceApp features such as its face-swapping mode. Of course, these results are often deliberately exaggerated or outlandish for comedic effect.

Unfortunately, the nature of deepfake software has led to it being exploited for a number of less-than-decent ends, including the act of ‘nudifying’ a person.

With greater access to online courses and resources that teach people to code and program, as well as the ease of use of some deepfake tools, this problem isn’t limited to a select group of people. Anyone, anywhere, has the potential to be exploited using this technology. All it requires is a few photos of your face and less than a minute of audio of your voice.

Before we explore AI nudification further, let’s develop an understanding of how, exactly, deepfake technology operates.

How does it work?

Deepfake technology typically draws on two types of machine learning (ML) model to achieve its output.

Variational autoencoders

Firstly, as with any ML process, data has to be fed into an algorithm so that the software can learn patterns to use later. This involves variational autoencoders (VAEs), which are made from a pair of connected neural networks: an encoder and a decoder.

When an image (often one of a face) is encoded, it is compressed into a latent space, where the most important parts of the data are mapped into a numerical representation. When it is then decoded, the numerical data is transformed back into an image. An autoencoder is successful when as much of the original image as possible is still recognizable after it has been decoded and reconstructed.

This can then be used to generate new sample data and develop better facial recognition.

Often, only the most distinctive features of a face make it through, leaving the rest of the picture blurry. This is a problem when you are trying to produce an authentic-looking photograph.
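The encode/decode round trip, and why fine detail tends to get lost in it, can be illustrated with a deliberately simplified Python sketch. It is a toy under stated assumptions, not a VAE: the hypothetical `encode` and `decode` functions are fixed rules (average neighbouring pixel pairs, then duplicate each value back) rather than learned neural networks, and a real VAE encodes a probability distribution rather than a single point. `reconstruction_error` measures how much of the original row survives.

```python
# Toy stand-in for an autoencoder's encode -> decode round trip.
# ASSUMPTION: real (variational) autoencoders LEARN their encoder and decoder
# as neural networks; these two hypothetical functions are fixed rules instead.


def encode(pixels: list[float]) -> list[float]:
    """Compress a row of pixels (even length) into a latent vector half the size
    by averaging each neighbouring pair."""
    return [(pixels[i] + pixels[i + 1]) / 2 for i in range(0, len(pixels), 2)]


def decode(latent: list[float]) -> list[float]:
    """Rebuild a full-size row by repeating each latent value twice.
    Any difference between the two original pixels in a pair is lost."""
    rebuilt: list[float] = []
    for value in latent:
        rebuilt.extend([value, value])
    return rebuilt


def reconstruction_error(original: list[float], rebuilt: list[float]) -> float:
    """Mean squared error: 0.0 means the round trip preserved the row exactly."""
    return sum((a - b) ** 2 for a, b in zip(original, rebuilt)) / len(original)


if __name__ == "__main__":
    smooth_row = [0.2, 0.2, 0.5, 0.5, 0.9, 0.9, 0.4, 0.4]    # broad, simple shapes
    detailed_row = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0]  # fine, high-contrast detail
    for name, row in [("smooth", smooth_row), ("detailed", detailed_row)]:
        rebuilt = decode(encode(row))
        print(name, rebuilt, reconstruction_error(row, rebuilt))
```

Here the smooth row comes back unchanged with an error of 0.0, while the detailed row comes back as a flat run of 0.5 values with an error of 0.25: a one-dimensional version of the blurriness described above.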

Generative adversarial networks

The second part of deepfake learning uses generative adversarial networks (GANs), which can generate far more photo-realistic images.

Here’s a (very) simplified look at how it works:

- A generator neural network takes a series of random numbers as input and produces a new, inauthentic image.

- A discriminator neural network is fed a mixture of authentic and inauthentic data, which it then evaluates.

- The discriminator designates a fractional score of authenticity prediction to the images, where 1 = absolute certainty of authenticity and 0 = absolute certainty of inauthenticity.

- A feedback loop allows the generator network to identify where it was caught out and train itself to avoid detection next time, creating more authentic-looking images.
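The generator/discriminator feedback loop in the list above can be sketched in a few dozen lines of Python. This is a hypothetical toy that works on single numbers instead of images: the ‘real’ data are draws from a normal distribution centred on 4, the generator is a straight line applied to random noise, and the discriminator is one logistic unit whose output is the 0-to-1 authenticity score. The class `ToyGAN` and its methods are illustrative names, not any library’s API, and real image GANs use deep networks trained with a framework, though the alternating structure is the same idea.

```python
import math
import random

# Toy one-dimensional GAN. ASSUMPTIONS (all hypothetical, for illustration only):
# - "real" data are single numbers drawn from a normal distribution N(4, 0.5);
# - the generator is a straight line, fake = a * z + b, applied to random noise z;
# - the discriminator is one logistic unit, D(x) = sigmoid(w * x + c).
# Gradients are written out by hand so that only the standard library is needed.


def sigmoid(t: float) -> float:
    """Numerically stable logistic function; output lies between 0 and 1."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class ToyGAN:
    def __init__(self, seed: int = 0) -> None:
        self.rng = random.Random(seed)
        self.a, self.b = 1.0, 0.0  # generator parameters: fakes start near 0
        self.w, self.c = 0.0, 0.0  # discriminator parameters: D starts at 0.5 everywhere

    def sample_batch(self, n: int = 64) -> tuple[list[float], list[float]]:
        """Draw n real samples and n noise values (equal batch sizes are assumed below)."""
        reals = [self.rng.gauss(4.0, 0.5) for _ in range(n)]
        noise = [self.rng.gauss(0.0, 1.0) for _ in range(n)]
        return reals, noise

    def generate(self, noise: list[float]) -> list[float]:
        """Generator: turn random numbers into fake samples."""
        return [self.a * z + self.b for z in noise]

    def score(self, x: float) -> float:
        """Discriminator: close to 1 = 'looks real', close to 0 = 'looks fake'."""
        return sigmoid(self.w * x + self.c)

    def generator_loss(self, noise: list[float]) -> float:
        """Non-saturating generator loss, -mean(log D(fake)); lower when fakes pass as real."""
        fakes = self.generate(noise)
        return -sum(math.log(self.score(f)) for f in fakes) / len(fakes)

    def discriminator_step(self, reals: list[float], noise: list[float], lr: float = 0.05) -> None:
        """One gradient step on binary cross-entropy: push D(real) up and D(fake) down."""
        fakes = self.generate(noise)
        # d(loss)/d(logit) is D(x) - 1 for real samples and D(x) for fake samples.
        real_err = [self.score(r) - 1.0 for r in reals]
        fake_err = [self.score(f) for f in fakes]
        n = len(reals)
        dw = (sum(e * r for e, r in zip(real_err, reals)) + sum(e * f for e, f in zip(fake_err, fakes))) / n
        dc = (sum(real_err) + sum(fake_err)) / n
        self.w -= lr * dw
        self.c -= lr * dc

    def generator_step(self, noise: list[float], lr: float = 0.05) -> None:
        """Feedback loop: adjust a and b so the discriminator scores the fakes higher."""
        fakes = self.generate(noise)
        # d/d(fake) of -log D(fake) is -(1 - D(fake)) * w; chain rule gives z for a, 1 for b.
        grads = [-(1.0 - self.score(f)) * self.w for f in fakes]
        self.a -= lr * sum(g * z for g, z in zip(grads, noise)) / len(noise)
        self.b -= lr * sum(grads) / len(noise)


if __name__ == "__main__":
    gan = ToyGAN(seed=1)
    for step in range(1001):
        reals, noise = gan.sample_batch()
        gan.discriminator_step(reals, noise)  # discriminator learns to tell real from fake
        gan.generator_step(noise)             # generator learns to fool it
        if step % 200 == 0:
            fakes = gan.generate(noise)
            print(f"step {step}: average fake = {sum(fakes) / len(fakes):.2f}")
```

Each pass alternates one discriminator update with one generator update. Early on, the discriminator learns that larger numbers look ‘real’, so the generator shifts its output upwards towards the real data’s average of 4. Toy GANs like this one are not guaranteed to settle there and may overshoot and oscillate, which hints at why GAN training is often described as unstable.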

When combined

When these parts are combined, AI is able to generate entirely new, authentic-looking images based on scraps of information from the dataset it learned from.

Currently, the largest amount of data available comes from people in the public sphere. This includes politicians, celebrities, and influencers, all of whom have hours of content available online, and thousands, if not millions, of photos of them.

However, social media has made it far easier for people to post hours of content of themselves online, too. Instagram pages become digital scrapbooks. YouTube channels show how we move and speak. Every piece of information that is uploaded can be added to training datasets, creating a more diverse, varied array of authentic material for AI to learn from.

Prevalent issues with deepfake technology

Whilst the majority of what people see about deepfakes in mainstream media comes in the form of comedic content, such as political figures playing Minecraft or CS:GO together, this is only the tip of the iceberg.

Deepfake pornography

In 2019, Amsterdam-based cybersecurity company Deeptrace Labs conducted a study into deepfake content. Of the roughly 15,000 deepfake videos it found online at the time (a number which has since leapt into the millions as of 2023), a staggering 96% were pornographic.

The majority of these videos featured women, specifically female celebrities, whose likenesses had been taken without their consent and superimposed onto the bodies of porn stars and other adult-content creators.

Actresses Kristen Bell, Margot Robbie, Scarlett Johansson, and Emma Watson have spoken out against the use of their features when it comes to explicit content. But a resounding feeling of helplessness over the issue underpins many of their comments.

In an interview for The Washington Post from 2018, Johansson stated:

“Every country has their own legalese regarding the right to your own image, so while you may be able to take down sites in the U.S. that are using your face, the same rules might not apply in Germany. Even if you copyright pictures with your image that belong to you, the same copyright laws don’t apply overseas. I have sadly been down this road many, many times.

The fact is that trying to protect yourself from the internet and its depravity is basically a lost cause, for the most part.”

Recently, online influencers QTCinderella, Pokimane, and Sweet Anita expressed their horror and disgust after fellow Twitch streamer Brandon “Atrioc” Ewing accidentally revealed that he had been watching deepfake pornography on a website that used the women’s faces.

Following assertive efforts from QTCinderella and her coworkers, as well as an outpouring of support from fans online, the website responsible for these videos was reportedly shut down. However, QTCinderella, 28, has said that since the incident went viral, she is still being sent the same explicit videos, which users downloaded before the site was closed.

AI-leveraged nudifiers

This is not a recent issue, by any means.

Johansson’s comments reach all the way back to 2010. Over a decade later, in 2021, English MP Maria Miller told the BBC that “it should be a sexual offense to distribute sexual images online without consent, reflecting the severity of the impact on people’s lives.”

Mrs Miller’s comments to the House of Commons in December 2021 called the decision to create and share a deepfake or a nudified image a “highly sinister, predatory and sexualised act.” She cited an incident from 2019 where Helen Mort, a poet and broadcaster from Sheffield, found pornographic images of herself online, which had been “taken from her social media, including photographs from her pregnancy.” They had been in circulation for years without her knowledge.

There are currently dozens of deepfake apps designed to make it ‘easy’ to create nude images. At the time of writing, the UK Parliament has not finished passing an online safety bill that would make these apps, and others like them, much harder to access.

Revenge porn

The accessibility of deepfake services makes it significantly easier to perpetrate what is known as revenge porn. This is where someone uploads genuine or artificial explicit material of their ex-partner for the purposes of harassment, humiliation, or blackmail.

With the advent of AI nudification, authentic explicit images of an individual are no longer necessary. A malicious party could doctor images through the use of deepfake technology and achieve the same realistic effect that the possession of genuine images would produce.

This already horrifying act becomes even more nefarious when you understand that many websites which are specifically designed to host revenge porn encourage perpetrators to include the names and hometowns of their victims in online forums.

Whilst 48 US states currently have some form of ban on revenge porn, as of April 2023 only four of them (California, New York, Georgia, and Virginia) include a ban on deepfake revenge porn.

General attitudes

It doesn’t matter if the images are crude or poorly constructed. The psychological impact of seeing your own face engaging in explicit acts – ones which you never consented to – can be traumatizing.

There is a pervasive and unfortunate sense of entitlement that many people on the internet adopt when it comes to consuming content in any form. The general idea is that if you, as a content creator, have put yourself in the public domain, you have become a commodity and have lost the rights to your image and your overall sense of self.

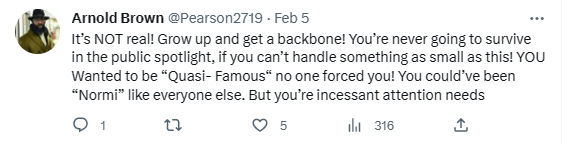

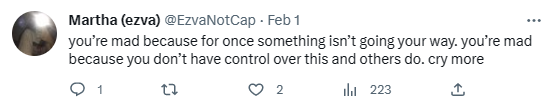

For example, take these Tweets that were published under QTCinderella’s initial comments about her situation.

It is immensely demoralizing to see how vitriolic certain people become when valid concerns are raised about the dangers and negative impact of deepfake porn. A report from The Washington Post stated that “research indicates that victims of non-consensual pornography experience similar trauma to sexual assault survivors.”

And when AI nudifying websites almost proudly advertise an ‘X-ray’ feature for undressing women, operate under the slogan ‘See any girl clothless with the click of a button’, and offer ready-made step-by-step tutorials on how to achieve the best results, it seems as though there’s no end in sight to the cycle of exploitation and degradation.

Source omitted

How to keep yourself safe

The disturbing truth is that there is no sure-fire way to keep yourself completely safe from the risk of fake nudes being circulated, short of staying off the internet altogether. However, here are a few general online safety tips that can help you reduce the risk.

- Keep your social media private. For those of us who aren’t online influencers, it’s important that we keep control of who is following our socials. Private and request-to-follow features on Instagram, Facebook, or Twitter give that one extra layer of protection against strangers being able to access your images.

- Don’t reveal private information online. This seems like an obvious one for anyone who has grown up using computers. Information such as your date of birth, address, and contact details should be kept to yourself when online. Additionally, don’t broadcast personal issues such as mental health struggles in your bio. Whilst it is important to raise awareness of certain struggles, be aware of what people can use against you.

- Don’t be afraid to ask for help. Revenge porn helplines exist all around the world, and more efforts are being made to tackle deepfake variants as well. If you discover that you have been targeted in this way, contact professionals who will do what they can to help.

- Use multi-factor authentication. The more layers of security there are between your accounts and would-be intruders, the less chance there is of someone being able to hack in and steal your photos.

Final thoughts

Unfortunately, the moment deepfake issues are brought up, there is an almost predictable spike in Google searches from people wanting to “research” it for themselves.

It’s a cruel, double-edged sword whereby attention needs to be brought to the issues surrounding deepfake pornography, nudifying tools, and the creation of non-consensual content. But the moment someone does, they run the risk of attracting the wrong crowd.

Ultimately, it is highly unlikely that we will ever be able to completely stop fake explicit image generation. Nevertheless, by lobbying and campaigning to senators, governors, or local MPs, we increase the chance of legislation being put in place to clamp down on the misuse of deepfake technology.

AI can be a tool for good when used correctly. To find out more, and keep on top of the latest news, stay in touch with Top Apps.

Alex Tyndall

With a passion for exploring the latest apps and software, she brings a wealth of knowledge to her role as a professional writer at TopApps.ai.
