Published on: May 18, 2023 Updated on: March 6, 2024

ChatGPT Uncovered: A Deep Dive into Prompt Engineering

Author: Asiya Khatun

Welcome to our easy-to-understand guide on prompting and ChatGPT prompt engineering. If you’re into the world of AI and language models like ChatGPT, you should know that prompts play a huge role. They help unlock the power of large language models (LLMs) and make AI-generated content that’s just right for your needs.

Being a pro at prompt engineering is a game-changer when you’re working with ChatGPT and other LLMs. It lets you tailor AI responses to different situations. By mastering prompt engineering techniques, you can make more engaging, helpful, and personalized content for your audience.

Unveiling the world of LLM prompts

What are LLM prompts?

LLM prompts are like instructions we give to AI models like ChatGPT to create useful and on-point responses. These prompts help the AI system figure out what kind of output we want, the topic we’re talking about, and the tone we’d like.

Why do we use large language model prompts in AI systems?

The main reasons we use LLM prompts are to help AI systems:

- Get the context: Prompts give background info so AI can generate a response that matches what the user wants.

- Stick to the rules: Prompts can set specific requirements to make sure AI-generated content follows certain guidelines.

- Handle different situations: We can use different prompts to get content for all kinds of scenarios, from friendly chats to professional writing.

How prompts and ChatGPT work together

ChatGPT, a large language model, depends heavily on the quality and detail of its prompts to produce accurate and fitting responses. The prompt engineering techniques we use directly affect how well it performs. That’s why learning to write effective prompts matters for machine learning engineers and everyday users alike: it’s how you get the most out of the AI model.

The intricacies of how LLM prompts work

Role of prompts in AI model training

Prompts play a vital role in training AI models like ChatGPT. Models like ChatGPT are first pretrained on vast amounts of text, learning to predict the next word (more precisely, the next token). They are then fine-tuned on example prompts paired with high-quality responses and further refined using human feedback. Through this process, the models learn language patterns, context, and the relationships between prompts and responses, which is what lets them produce relevant and coherent responses to new prompts.

Connection between prompts and AI-generated responses

The relationship between prompts and AI-generated responses is similar to a question-and-answer scenario. When you give a prompt to an AI model, it doesn’t retrieve a stored answer; instead, it generates a response one token at a time, drawing on patterns learned during training and on the context supplied in the prompt. The quality and relevance of the response largely depend on two key principles: clarity and specificity.

Delving into the types of LLM prompts

Single-turn prompts

Single-turn prompts are simple, one-time input prompts that require only one response from the AI model. They typically involve a straightforward question or instruction and are used when a direct answer is expected. For example, asking an AI model to “provide a summary of XYZ book” would be a single-turn prompt.

Multi-turn prompts

Multi-turn prompts involve a back-and-forth conversation between the user and the AI model, with multiple exchanges. These prompts simulate a more interactive and dynamic conversation, allowing the AI model to engage in deeper discussions or provide more detailed information. For example, a user might ask a series of follow-up questions on a topic, and the AI model would provide relevant responses in a conversational manner.

Open-ended vs. closed-ended prompts

Open-ended prompts: These prompts encourage AI models to generate more creative responses. They typically involve questions or instructions that can have multiple correct or valid answers, allowing the AI model to explore various possibilities. For example, “Write a short story about a time-traveling cat” is an open-ended prompt.

Closed-ended prompts: These prompts require the AI model to provide a specific, focused response, often with only one correct or valid answer. They are typically used for factual inquiries or situations where a clear, concise answer is needed. For example, “What is the capital city of France?” is a closed-ended prompt.

Unpacking the components of an LLM prompt

Initial context

The initial context sets the stage for the AI model. It provides background info to help the AI understand the situation or topic. This info helps the AI generate a response that makes sense for the user’s needs.

Input message

The input message is the main part of the prompt. It’s the question, instruction, or statement the user wants the AI to respond to. For example, “Tell me about climate change” is an input message.

System message

System messages are optional but helpful. They give extra info or guidelines for the AI to follow. This can help create responses that follow specific rules or styles. For example, you could add “use a conversational tone” as a system message.

User message

User messages can be added to multi-turn prompts to simulate a conversation. They help the AI understand the user’s intent and give more detailed responses. For example, a user message could be a follow-up question or a reaction to the AI’s previous response.
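To make these components concrete, here is a minimal Python sketch of how a multi-turn prompt might be laid out as a list of messages. The `role`/`content` dictionary shape mirrors the format OpenAI’s Chat Completions API accepts, where the model’s earlier replies go in as `assistant` messages. The `build_conversation` helper is a hypothetical name used only for illustration, and the sketch only assembles the data; it doesn’t call any API.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def build_conversation(
    system: str,
    turns: List[Tuple[str, str]],
    new_input: str,
) -> List[Message]:
    """Assemble a chat-style prompt (hypothetical helper).

    system    -- system message with guidelines, e.g. the tone to use
    turns     -- earlier (user, assistant) exchanges that give multi-turn context
    new_input -- the user's latest input message
    """
    messages: List[Message] = [{"role": "system", "content": system}]
    for user_text, assistant_text in turns:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": new_input})
    return messages


conversation = build_conversation(
    system="You are a helpful assistant. Use a conversational tone.",
    turns=[(
        "Tell me about climate change.",
        "Climate change refers to long-term shifts in temperatures and weather patterns.",
    )],
    new_input="What can individuals do about it?",
)
for message in conversation:
    print(f"{message['role']}: {message['content']}")
```

A single-turn prompt is the same structure with `turns=[]`: just a system message followed by one input message.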

Exploring the concept of role prompting

Definition and purpose

Role prompting is a technique where the user gives the AI a specific role to play. By doing this, the user helps the AI understand the context better and create responses that fit the role. For example, you could ask the AI to act like a tutor or a friend.

Benefits and drawbacks

Benefits:

- More focused responses: Role prompting helps the AI generate responses that fit the role, making them more relevant.

- Better user experience: Users can have more engaging and enjoyable interactions with the AI when it plays a specific role.

Drawbacks:

- Overly narrow responses: Sometimes, the AI might create responses that are too focused on the role, making them less helpful.

- Role confusion: If the role isn’t clear, the AI might struggle to understand the user’s intent and give poor responses.

Examples of role prompting

1. Tutor: “As a math tutor, explain the Pythagorean theorem to me.”

2. Travel agent: “As a travel agent, recommend the top 3 vacation destinations for a family with young children.”

3. Personal trainer: “As a personal trainer, suggest a workout plan for someone who’s new to exercise.”

The art of using prompts in AI training

How example prompts are used in training AI models

Example prompts are important for training AI models like ChatGPT. These examples help AI models learn different patterns, language rules, and how to create responses that match the user’s intent. By using lots of example prompts during training, AI models can improve their understanding and become better at generating useful responses.

Factors to consider when creating training examples

- Clarity: Make sure your example prompts are clear and easy to understand.

- Relevance: Choose examples that are related to the topics or situations the AI model will be used for.

- Variety: Use a wide range of examples to help the AI model learn different language patterns and contexts.

- Difficulty: Include examples with different levels of complexity to help the AI model adapt to various situations.

Giving voice to AI: Voice definition

Importance of voice in AI-generated content

Voice is an essential part of AI-generated content. It helps create a consistent tone and style, making the content feel more natural and engaging. A well-defined voice can also make the AI model’s responses more relatable and enjoyable for users. Are you creating informative responses? Consider a teaching style. Are you looking for responses that are more fun or silly? Build that into the prompts to get the output you want.

Strategies for defining an AI’s voice

Choose a target audience: Think about who you want the AI to communicate with, and tailor the voice to match their preferences.

Set a tone: Decide if the AI’s voice should be formal, casual, friendly, or something else.

Use specific language: Pick words and phrases that fit the AI’s voice and help create a consistent style.

Provide examples: Give the AI model examples of the desired voice to help it learn and adapt.

Examples of voice definition

Professional voice: Use formal language, avoid slang, and focus on providing accurate and helpful information.

Friendly voice: Use casual language, include conversational phrases, and make the AI’s responses feel warm and welcoming.

Creative voice: Encourage the AI to use colorful language, vivid descriptions, and think outside the box when generating content.

Recognizing and utilizing patterns

Recognizing and using patterns in prompts

Patterns in prompts help AI models recognize specific language structures, formats, or topics. By using patterns in your prompts, you can guide the AI model to generate responses that follow a certain structure or style. This can make the AI’s output more consistent and aligned with the desired outcome.

Benefits and drawbacks of using patterns

Benefits:

- Consistency: Patterns help create uniform responses across different prompts.

- Predictability: Patterns make it easier to anticipate the AI model’s output, leading to more reliable results.

Drawbacks:

- Rigidity: Overusing patterns can make the AI’s responses feel repetitive and less creative.

- Limitations: Relying too heavily on patterns might prevent the AI model from exploring new or innovative ideas.

Examples of patterns in LLM prompts

Q&A format: “Question: What is photosynthesis? Answer: Photosynthesis is the process by which plants convert sunlight, carbon dioxide, and water into glucose and oxygen.”

Step-by-step instructions: “Describe the process of making a cup of tea in three simple steps.”

List format: “List five benefits of regular exercise.”
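The Q&A pattern above can be turned into a reusable template. In this minimal sketch, worked examples establish the `Question:`/`Answer:` structure, and the prompt ends with an open `Answer:` so the model is nudged to continue in the same format. The `few_shot_prompt` function and its example pair are illustrative assumptions, not a standard API.

```python
from typing import List, Tuple


def few_shot_prompt(examples: List[Tuple[str, str]], question: str) -> str:
    """Build a Q&A-pattern prompt from example pairs (hypothetical helper)."""
    blocks = [f"Question: {q}\nAnswer: {a}" for q, a in examples]
    # Leave the final answer empty so the model continues the established pattern.
    blocks.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(blocks)


examples = [
    (
        "What is photosynthesis?",
        "Photosynthesis is the process by which plants convert sunlight, "
        "carbon dioxide, and water into glucose and oxygen.",
    ),
]
print(few_shot_prompt(examples, "What is respiration?"))
```

Adding more example pairs strengthens the pattern, at the cost of a longer prompt.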

The synergy of combining techniques

Merging role prompting, voice definition, and patterns

Combining techniques like role prompting, voice definition, and patterns can lead to more effective and engaging LLM prompts. By using these techniques together, you can guide the AI model to generate content that is not only contextually relevant but also stylistically consistent and well-structured.

Benefits of combining prompt techniques

Higher-quality output: Combining techniques can result in more focused, accurate, and engaging AI-generated content.

Greater control: Using multiple techniques gives you more influence over the AI’s responses, allowing you to fine-tune the output to better meet your needs.

Adaptability: Combining techniques helps the AI model adapt to different situations, audiences, and content requirements.

Examples of combined techniques in LLM prompts

Role prompting and voice definition: “As a friendly science teacher, explain the water cycle in simple terms.”

Role prompting and patterns: “As a personal trainer, list three exercises for each muscle group: legs, arms, and core.”

Voice definition and patterns: “In a professional tone, provide a step-by-step guide to preparing for a job interview.”

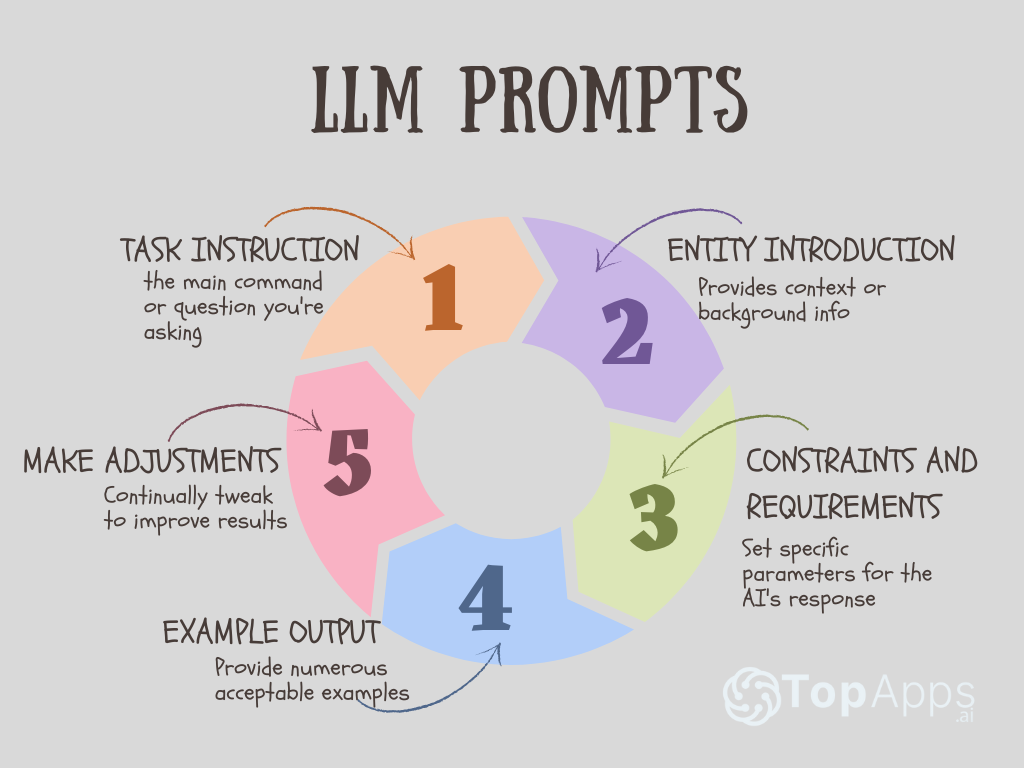

Dissecting the parts of a prompt

Task instruction

The task instruction tells the AI model what you want it to do, and writing it is the first step in crafting an effective prompt. It’s like the main command or question you’re asking. For example, “Write a summary” or “Explain this concept” are task instructions.

Entity introduction

The entity introduction is the part of the prompt that provides context or background info. This helps the AI model understand what the task instruction is about. For example, when asking the AI to “explain photosynthesis,” “photosynthesis” is the entity introduction.

Constraints and requirements

Constraints and requirements set specific rules or guidelines for the AI’s response. They help ensure the output follows certain criteria, like word count or tone. For example, you could add “in 100 words” or “using simple language” as constraints.

Example output

Example outputs are optional but useful. They give the AI model a clear idea of what you expect its response to look like. Even one or a few examples can help the AI model create content that matches your desired style or format.
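The four parts can also be assembled programmatically. The sketch below assumes one simple layout (instruction and entity first, then constraints as a list, then an optional example); the `PromptParts` class and its field names are hypothetical, chosen to match the terms used in this section.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PromptParts:
    """Hypothetical container for the parts of a prompt described above."""
    task_instruction: str                 # e.g. "Explain"
    entity: str                           # e.g. "photosynthesis"
    constraints: List[str] = field(default_factory=list)
    example_output: Optional[str] = None  # optional sample of the desired format

    def render(self) -> str:
        lines = [f"{self.task_instruction} {self.entity}."]
        if self.constraints:
            lines.append("Requirements:")
            lines.extend(f"- {c}" for c in self.constraints)
        if self.example_output:
            lines.append("Example of the expected output:")
            lines.append(self.example_output)
        return "\n".join(lines)


prompt = PromptParts(
    task_instruction="Explain",
    entity="photosynthesis",
    constraints=["in 100 words", "using simple language"],
)
print(prompt.render())
```

Keeping the parts separate makes it easy to vary one (say, a constraint) while holding the others fixed during experimentation.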

Demystifying LLM parameters

Overview of LLM parameters

LLM parameters are settings you can adjust to influence the AI model’s behavior. They aren’t the model’s internal weights (which are also called parameters) but generation settings, typically exposed through an API, that control aspects of the output like how creative or focused it should be.

How parameters influence AI-generated content

Parameters can change the way AI models generate content in several ways. For example, you could lower a setting like temperature to make the AI model more conservative and predictable. Or, you could raise it to encourage the AI model to explore a wider range of word choices, resulting in more varied and creative output.

Examples of LLM parameters

- Temperature: This parameter controls how random or creative the AI’s output is. A lower temperature value leads to more focused and conservative responses, while a higher value encourages more varied and creative output.

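- Max tokens: This parameter sets the maximum length of the AI-generated response, measured in tokens (words or word parts; in English, a token averages roughly three-quarters of a word). Generation stops when the limit is reached, so a response that hits it may be cut off mid-sentence. Use it as a hard cap on length, and ask for a word count in the prompt itself if you want a naturally shorter answer.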

- Top-p: This parameter, also called nucleus sampling, influences the AI model’s response diversity. The model picks each next token only from the smallest set of most likely candidates whose combined probability reaches p. A lower value restricts it to common or expected words, while a higher value allows more diverse and less predictable responses.
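To show what these settings do mechanically, here is a simplified, self-contained sketch of sampling over a toy four-word vocabulary. Real models score tens of thousands of tokens and recompute the scores after every token, and providers may implement these settings differently; the vocabulary and scores below are made up purely for illustration. Temperature divides the scores before the softmax, top-p keeps only the smallest set of most likely tokens whose probabilities add up to at least `top_p`, and `max_tokens` caps how many tokens are generated.

```python
import math
import random
from typing import Dict, List


def softmax_with_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs: Dict[str, float], top_p: float) -> Dict[str, float]:
    """Keep the smallest set of most likely tokens whose total probability reaches top_p."""
    kept: Dict[str, float] = {}
    cumulative = 0.0
    for tok, p in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}  # renormalize the survivors


def sample_tokens(
    logits: Dict[str, float],
    temperature: float = 1.0,
    top_p: float = 1.0,
    max_tokens: int = 5,
    seed: int = 0,
) -> List[str]:
    """Toy generation loop that reuses the same made-up scores at every step."""
    rng = random.Random(seed)
    probs = top_p_filter(softmax_with_temperature(logits, temperature), top_p)
    tokens, weights = zip(*probs.items())
    # This toy loop has no stop token, so it always runs until the max_tokens cap;
    # a real model may stop earlier, and text that hits the cap is simply cut off.
    return [rng.choices(tokens, weights=weights)[0] for _ in range(max_tokens)]


toy_logits = {"sunny": 2.0, "cloudy": 1.0, "rainy": 0.5, "purple": -1.0}
print(softmax_with_temperature(toy_logits, 0.5))  # sharper: "sunny" dominates
print(softmax_with_temperature(toy_logits, 1.5))  # flatter: more variety
print(top_p_filter(softmax_with_temperature(toy_logits, 1.0), 0.9))
print(sample_tokens(toy_logits, temperature=0.7, top_p=0.9, max_tokens=3))
```

With these scores at temperature 1.0, `top_p=0.9` keeps “sunny”, “cloudy”, and “rainy” but drops the unlikely “purple” entirely, which is how top-p trims the long tail of odd word choices.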

The experimental approach to prompt engineering

Experimentation plays a crucial role in prompt engineering. It helps you fine-tune your prompts and understand how different techniques and parameters impact the AI model’s output. Through experimentation, you can discover the best ways to create prompts that lead to accurate, engaging, and valuable AI-generated content.

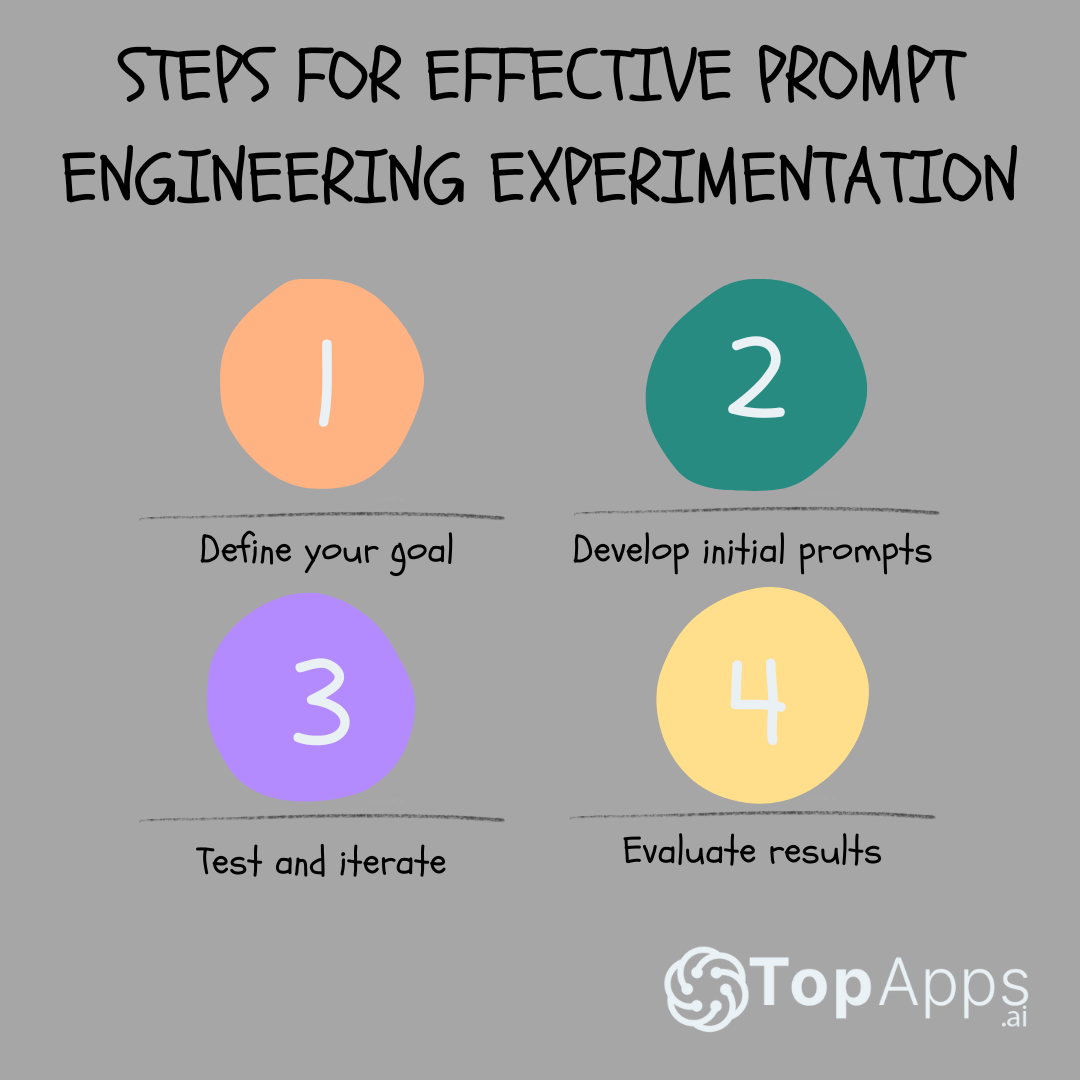

Steps for effective prompt engineering experimentation

- Define your goal: Start by identifying what you want to achieve with your AI-generated content. This could be anything from improving user engagement to providing helpful answers to user questions.

- Develop initial prompts: Create a set of initial prompts based on your goal. Use the techniques and parameters you’ve learned about, like role prompting, voice definition, and patterns, to craft your prompts.

- Test and iterate: Test your prompts with the AI model and analyze the generated content. Look for areas where the output could be improved, and make adjustments to your prompts accordingly. Repeat this process until you’re satisfied with the AI model’s responses.

- Evaluate results: Once you’ve refined your prompts, evaluate the AI-generated content based on your initial goal. Assess whether the output meets your expectations, and if necessary, go back to the drawing board and iterate further.
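The define, test, iterate, and evaluate steps can be made repeatable with a small harness. This sketch is written against a hypothetical `generate` callable (swap in whatever model client you use); here a fake model stands in so the example runs offline, and the two checks (a word limit and a required keyword) are example goals chosen for illustration, not a standard evaluation method.

```python
from typing import Callable, Dict, List

Check = Callable[[str], bool]


def evaluate_prompts(
    generate: Callable[[str], str],
    prompts: List[str],
    checks: Dict[str, Check],
) -> Dict[str, Dict[str, bool]]:
    """Run each prompt variant through the model and record which checks pass."""
    results: Dict[str, Dict[str, bool]] = {}
    for prompt in prompts:
        output = generate(prompt)
        results[prompt] = {name: check(output) for name, check in checks.items()}
    return results


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call so the sketch runs without network access."""
    if "three simple steps" in prompt:
        return "1. Boil water. 2. Steep the tea bag. 3. Add milk or sugar to taste."
    return "Tea is a popular drink made by pouring hot water over cured tea leaves, " * 5


prompt_variants = [
    "Describe how to make tea.",
    "Describe the process of making a cup of tea in three simple steps.",
]
checks: Dict[str, Check] = {
    "under_40_words": lambda text: len(text.split()) < 40,
    "mentions_water": lambda text: "water" in text.lower(),
}

for prompt, passed in evaluate_prompts(fake_model, prompt_variants, checks).items():
    print(f"{sum(passed.values())}/{len(passed)} checks passed: {prompt}")
```

Because the checks are tied to your goal rather than to any one prompt, you can rerun the same harness after every revision and compare variants side by side.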

Tips for successful prompt engineering experiments

Be patient: Prompt engineering can be a trial-and-error process, so don’t get discouraged if your initial prompts don’t produce perfect results. Keep refining and experimenting to find the best approach.

Embrace creativity: Don’t be afraid to think outside the box when developing prompts. Sometimes, unconventional techniques or ideas can lead to the most effective AI-generated content.

Learn from others: Look for examples of successful AI-generated content and analyze the prompts used to create it. This can give you valuable insights into effective prompt engineering techniques and strategies.

Keep up with AI advancements: Stay informed about the latest developments in AI and language models. New features or improvements might impact your prompt engineering process and give you more tools to work with.

Collaborate: Share your prompt engineering experiences with others and learn from their successes and challenges. Collaboration can lead to new ideas and approaches that improve your AI-generated content.

By following these steps and tips, you can approach prompt engineering with a strong foundation and increase the chances of creating successful AI-generated content that meets your goals. Experimentation is key to unlocking the full potential of language models like ChatGPT, so be prepared to iterate and learn as you go.

Navigating the challenges and limitations of LLM prompts

Understanding AI-generated content limitations

AI-generated content has its limitations, and it’s important to be aware of them when using LLM prompts. Some common limitations include:

- Inaccurate or nonsensical responses: AI models might generate content that seems plausible but is factually incorrect or doesn’t make sense.

- Sensitivity to phrasing: The way you phrase a prompt can have a significant impact on the AI’s response, leading to inconsistent output.

- Lack of common sense or deep understanding: AI models may struggle with tasks that require common sense, empathy, or a deep understanding of complex topics.

Addressing biases and ethical concerns

AI models can inadvertently learn biases from the data they are trained on, which may lead to biased or inappropriate output. To address this issue:

- Be aware of potential biases in your training data and consider ways to minimize them.

- Monitor the AI-generated content for signs of bias or inappropriateness, and adjust your prompts or parameters accordingly.

- Stay informed about ongoing research and advancements in AI ethics and fairness to ensure the responsible use of LLM prompts.

Balancing prompt specificity with AI-generated content quality

Striking the right balance between prompt specificity and content quality can be challenging. If a prompt is too vague, the AI might generate content that is unrelated or unhelpful. On the other hand, if a prompt is too specific, the AI might struggle to generate a meaningful response. To find the right balance:

- Start with a clear and concise prompt that conveys your intent.

- Experiment with different levels of specificity to see which approach yields the best results.

- Adjust prompt techniques, like role prompting or patterns, to guide the AI model’s output without overly constraining its creativity.

By being aware of these challenges and limitations, you can make more informed decisions when using LLM prompts and improve the quality of your AI-generated content.

Comparing Prompt Techniques: A Table View

| Technique | Definition | Benefits | Drawbacks |
| --- | --- | --- | --- |
| Role Prompting | Assigning a role to the AI model to guide its responses | Provides context for the AI model; results in more engaging and relevant content; can help overcome AI limitations in understanding | Can be too restrictive; may require more iterations to achieve desired results |
| Voice Definition | Defining the tone, style, or personality of the AI model | Ensures stylistic consistency; aligns AI-generated content with the target audience; enhances the user experience | Can be challenging to define; may limit the AI model’s creative potential |
| Patterns | Using recognizable structures or formats in prompts | Encourages consistency in AI-generated content; increases predictability of output; simplifies prompt creation | Can lead to repetitive output; may prevent the AI model from exploring new ideas |
| Combined Techniques | Merging multiple prompt techniques for more effective prompts | Improves output quality; provides greater control over AI-generated content; helps the AI model adapt to various situations and audiences | Requires more prompt engineering skill; may involve more complex prompt development |

Conclusion

Writing good prompts is a crucial skill for working with large language models, and ChatGPT is no exception. Understanding the different prompt techniques, such as role prompting, voice definition, and patterns, as well as how to combine them effectively, can help users systematically engineer good prompts to improve the quality of AI-generated content.

By experimenting with various prompts and parameters, and keeping in mind the challenges and limitations of AI-generated content, you can achieve more accurate, engaging, and valuable results. And you don’t have to be a machine learning or AI expert to give it a try.

As AI technology continues to advance, staying informed about the latest developments and collaborating with others in the field will help you harness the full potential of LLM prompts and create content that meets your goals and provides an excellent experience for your audience. Start experimenting with ChatGPT prompt engineering today!

Asiya Khatun

TopApps writer
